Stream Claude answers in real-time with PHP

Since the release of Claude 3, the AI model has boomed in popularity and become a serious alternative to ChatGPT. The quality of its reasoning and the relevance of its answers make it a strong choice as the engine behind an AI-powered application.

Credits: DALL-E

When it comes to artificial intelligence, the programming languages of choice are Python and JavaScript. Tools and SDKs are often built for those first, then ported to other platforms. When I started developing AI applications, I was often tasked with improving PHP websites or applications by powering them with artificial intelligence. Unfortunately, resources, examples and documentation are often hard to find, and LLM providers don't always offer tools suited to the reality PHP developers face. This is why I've decided to write my own series of articles about mixing PHP with the major LLMs on the market.

Here, let's talk about Anthropic, specifically their newest AI model, Claude 3. Anthropic has positioned itself as one of the top LLM providers on the market, and Claude should be considered when picking the right AI model for what you need to accomplish.

One of the key features I want to cover in this article is real-time message streaming, where a prompt is sent to Claude and each generated chunk of text is sent back as soon as it is produced, until the answer is complete. You are probably wondering, what's the big deal? The thing is, an LLM can take a long time to produce a full answer: 10, 15, 20 or even 30+ seconds. Making the user wait that whole time is a bad idea. It breaks the user experience and makes the app or website look unoptimized. The good thing with streams is that you receive parts of the answer the moment they are generated by the AI model. This means you can display the reply on screen, word by word, while it's being generated. Not only does it keep users busy reading the chunks as they come in, it also gives the impression that they are talking with a living being. This change in user experience can make the difference between a good product and a great one.

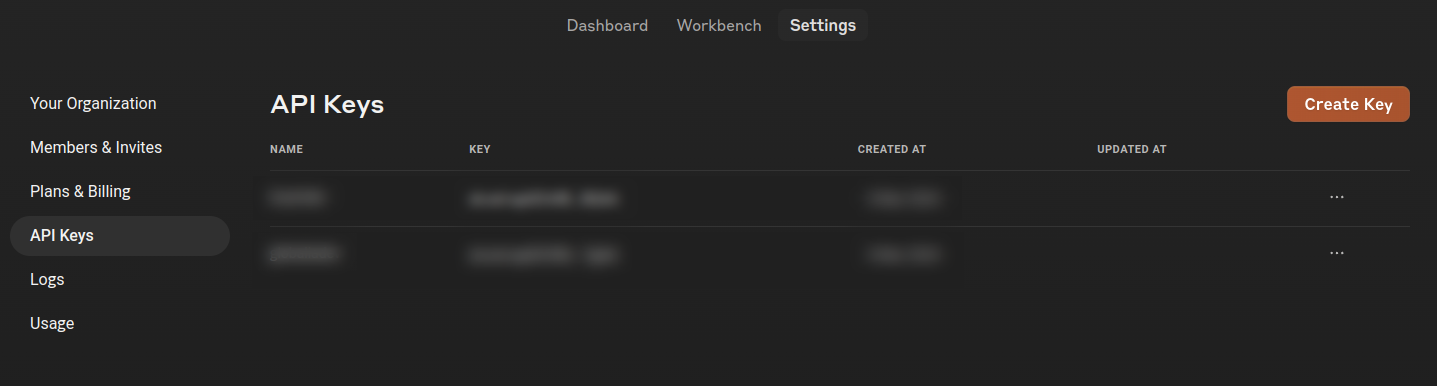

Without further ado, let's dive in. The following example connects directly to Anthropic's REST API using PHP streams. The first step is to create an account and gain access to the API. This is done through a paid account (at the time of writing, no free account could access the API). Once the account is created, navigate to the API keys section and create a new key.

Copy and paste that key in your code or environment file and let's start coding. Let's first find something to ask Claude. Something long enough to have it streamed in chunks.

```php
$message = "What are the best spots for tourists in Montreal?";
```

Montreal is a fantastic city and I would love to know what Claude has to say about it.

To get an answer, we will need to communicate through the Anthropic messages endpoint.

Next, let's prepare the headers to send with the request. This is where you set your Anthropic API key. For this example, I've set the key as an environment variable which I retrieve using the getenv PHP function.

```php
$url = "https://api.anthropic.com/v1/messages";

$headers = array(
    "x-api-key: " . getenv('YOUR_API_KEY'),
    "content-type: application/json",
    "anthropic-version: 2023-06-01"
);
```

We also need to prepare the body of the request. This is where we ask our question, define which Claude model we want to target and set other useful parameters. It's important to note that the value of the max_tokens parameter is the number of tokens after which Claude stops generating an answer. Here it is set to 1024, which means that if an answer needs more than 1024 tokens, it will be cut off before it's done.

It's also important to note the addition of the stream parameter. If set to true, the message will be split into chunks and sent through a stream.

```php
$body = array(
    "model" => 'claude-3-sonnet-20240229',
    "max_tokens" => 1024, // Claude stops generating text when the number of output tokens reaches this value.
    "stream" => true,
    "messages" => array(
        array(
            "role" => "user",
            "content" => $message
        )
    )
);
```

To generate a quicker (and less costly) answer, I've picked the Claude 3 Sonnet model. If you need a deeper answer, or something even quicker, feel free to try the other Claude 3 models available.

When creating a discussion, LLMs like Claude or ChatGPT are designed around a dialog between two speakers. This is why you need to create a message structure as shown above in the body of your request. By doing so, you tell Claude that the user said something and is waiting for an answer. Each AI provider has its own structure and standards, which will help you trace back earlier messages by parsing through the data.
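To make the idea of earlier messages more concrete, here is a minimal sketch of how a conversation history could be carried in the messages array. The helper name appendTurn and the sample conversation are hypothetical and only there for illustration; the part taken from the request body above is the role/content structure, with the role alternating between user and assistant.

```php
<?php
// Hypothetical helper (not part of any SDK): appends one turn to a history array
// using the same role/content structure as the request body.
function appendTurn(array $messages, string $role, string $content): array
{
    if ($role !== 'user' && $role !== 'assistant') {
        throw new InvalidArgumentException("Unknown role: $role");
    }
    $messages[] = array("role" => $role, "content" => $content);
    return $messages;
}

// Sample conversation, invented for illustration.
$messages = array();
$messages = appendTurn($messages, "user", "What are the best spots for tourists in Montreal?");
$messages = appendTurn($messages, "assistant", "Old Montreal and Mount Royal are popular choices.");
$messages = appendTurn($messages, "user", "Which one is better in winter?");

// The whole history would go into the "messages" key of the request body.
echo json_encode($messages, JSON_PRETTY_PRINT), "\n";
```

Sending the full history with each request is what lets the model answer the last question in the context of the earlier ones.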

The messages API expects a POST request.

```php
$context = stream_context_create(
    [
        'http' => [
            'method' => 'POST',
            'header' => $headers,
            'content' => json_encode($body),
        ],
    ]
);

$response = fopen($url, 'r', false, $context);

if ($response === false) {
    echo "Couldn't connect to the API\n";
    exit;
}
```

To establish a stream for receiving chunks, PHP has a native function called stream_context_create. When combined with fopen, it lets us connect to the API endpoint while keeping the connection open and ready for incoming data chunks. The stream is opened read-only (as shown by the r parameter) and starts at the beginning.
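One thing worth knowing about the http context is what happens when the API answers with an error status, for instance because of an invalid key. By default fopen fails on such a response and the error body is lost. As a hedged sketch, the http context also accepts an ignore_errors option, which lets you read the body anyway, and a timeout option (a read timeout in seconds). The function name buildContextOptions and the 30 second value are my own choices for illustration.

```php
<?php
// Hypothetical helper: builds the options array passed to stream_context_create().
function buildContextOptions(array $headers, array $body, float $timeout = 30.0): array
{
    return [
        'http' => [
            'method'        => 'POST',
            'header'        => $headers,
            'content'       => json_encode($body),
            'timeout'       => $timeout, // read timeout in seconds (value chosen arbitrarily)
            'ignore_errors' => true,     // still return the body on 4xx/5xx responses
        ],
    ];
}

$options = buildContextOptions(
    ["content-type: application/json"],
    ["model" => "claude-3-sonnet-20240229", "max_tokens" => 1024, "stream" => true, "messages" => []]
);

// Creating the context does not open any connection; that only happens on fopen().
$context = stream_context_create($options);
var_dump(is_resource($context));
```

Keep in mind that with ignore_errors enabled, a rejected request no longer makes fopen return false, so the first thing read from the stream may be a JSON error object rather than data lines.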

What's left is to read and parse the incoming chunks. This can be done by reading in a loop until EOF (end of file) is reached.

```php
$dataExtractionRegex = '/data: (.*)/';
$answer = '';
$outputTokens = 0;

while (!feof($response)) {
    $chunk = fgets($response);
    if ($chunk !== false) {
        // Claude 3 sends each data chunk with extra information around it,
        // so we use a regex to extract the data from the chunk.
        preg_match_all($dataExtractionRegex, $chunk, $matches);
        if (!empty($matches[1])) {
            // The data is formatted as a JSON object, so we can decode it to an associative array.
            $data = json_decode(trim($matches[1][0]), true);
            // json_decode returns null (not false) when the JSON is invalid.
            if (is_array($data) && isset($data['type'])) {
                if ($data['type'] === 'content_block_delta') {
                    // The content block delta contains the text generated by the model.
                    $answer .= $data['delta']['text'];
                    echo $data['delta']['text'];
                } elseif ($data['type'] === 'message_delta') {
                    // The message delta contains the stop reason and the number of output tokens.
                    $outputTokens += $data['usage']['output_tokens'];
                }
            }
        }
    }
}

fclose($response);

echo "\nOutput tokens: $outputTokens\n";
```

The data received in each chunk is formatted like this:

data: <JSON>

A regular expression is then used to extract the JSON, which is decoded into an array. Since the chunks all share the same format, a simple substring operation would work just as well.
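For reference, the substring approach could look like the sketch below. The function name parseDataLine is hypothetical, and the sample line is written by hand in the data: <JSON> shape described above rather than captured from a real response.

```php
<?php
// Hypothetical helper: extracts and decodes the JSON from one "data: <JSON>" line
// using plain string functions instead of a regular expression.
function parseDataLine(string $line): ?array
{
    $prefix = 'data: ';
    // Lines that do not start with the prefix (blank lines, other fields) are skipped.
    if (strncmp($line, $prefix, strlen($prefix)) !== 0) {
        return null;
    }
    $data = json_decode(trim(substr($line, strlen($prefix))), true);
    // json_decode() returns null on invalid JSON; only arrays are useful here.
    return is_array($data) ? $data : null;
}

// Sample line written by hand for illustration.
$line = 'data: {"type":"content_block_delta","delta":{"type":"text_delta","text":"Bonjour"}}' . "\n";
$data = parseDataLine($line);
echo $data['delta']['text'], "\n"; // prints "Bonjour"
```

Returning null for anything that is not a valid data line keeps the calling loop simple: it only has to check for null before looking at the type.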

Once the JSON data has been properly decoded, the delta text is appended to what's on screen using echo. When the stream is done, the number of tokens it took to generate the answer is displayed to the user.
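The reading logic can be tried without calling the API by feeding it a fake stream. The sketch below writes a few hand-made lines to php://memory and runs the same kind of loop over them. The function name readStreamedAnswer and the sample events are invented for illustration. The stop_reason field is, to my knowledge, reported in the message_delta event, and a value of max_tokens would indicate that the answer was cut by the limit discussed earlier; treat that part as an assumption to verify against the API documentation.

```php
<?php
// Hypothetical helper: reads "data: <JSON>" lines from any readable stream and
// returns the collected text, the output token count and the stop reason.
function readStreamedAnswer($stream): array
{
    $result = ['answer' => '', 'outputTokens' => 0, 'stopReason' => null];
    while (($line = fgets($stream)) !== false) {
        if (!preg_match('/^data: (.*)/', $line, $matches)) {
            continue; // not a data line
        }
        $data = json_decode(trim($matches[1]), true);
        if (!is_array($data) || !isset($data['type'])) {
            continue; // invalid or unexpected JSON
        }
        if ($data['type'] === 'content_block_delta') {
            $result['answer'] .= $data['delta']['text'] ?? '';
        } elseif ($data['type'] === 'message_delta') {
            $result['outputTokens'] += $data['usage']['output_tokens'] ?? 0;
            $result['stopReason'] = $data['delta']['stop_reason'] ?? null;
        }
    }
    return $result;
}

// Fake stream with hand-written events, standing in for the API response.
$stream = fopen('php://memory', 'w+');
fwrite($stream, 'data: {"type":"content_block_delta","delta":{"text":"Old "}}' . "\n\n");
fwrite($stream, 'data: {"type":"content_block_delta","delta":{"text":"Montreal"}}' . "\n\n");
fwrite($stream, 'data: {"type":"message_delta","delta":{"stop_reason":"max_tokens"},"usage":{"output_tokens":2}}' . "\n");
rewind($stream);

$result = readStreamedAnswer($stream);
fclose($stream);

echo $result['answer'], "\n";
if ($result['stopReason'] === 'max_tokens') {
    echo "The answer was cut off by max_tokens.\n";
}
```

Wrapping the loop in a function that takes a stream makes it easy to test with fake data first, then pass it the handle returned by fopen.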

Closing thoughts

It's a pretty basic example that demonstrates how streaming can be achieved between a PHP website or application and Anthropic's Claude LLM. Feel free to adjust it to your own needs, but keep in mind that the PHP process reading from the stream stays busy for the duration of the message. It could be a great idea to mitigate the problem by storing the chunks in a cache engine while other processes consume them in real time.
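As a rough sketch of that idea, one process could append chunks to a shared store under a message id while another polls from the last position it has seen. The ChunkBuffer class below is hypothetical and uses a plain PHP array as a stand-in; in a real setup the array would be replaced by whatever cache engine you use, which is outside the scope of this example.

```php
<?php
// Hypothetical class: an in-memory stand-in for a cache engine holding streamed chunks.
final class ChunkBuffer
{
    /** @var array<string, string[]> chunks stored per message id */
    private array $store = [];

    // Called by the process reading from the API each time a chunk arrives.
    public function append(string $messageId, string $chunk): void
    {
        $this->store[$messageId][] = $chunk;
    }

    // Called by a consumer: returns the chunks added since position $offset.
    public function readFrom(string $messageId, int $offset): array
    {
        return array_slice($this->store[$messageId] ?? [], $offset);
    }
}

$buffer = new ChunkBuffer();
$buffer->append('msg-1', 'Old ');
$buffer->append('msg-1', 'Montreal');

// A consumer that has already seen one chunk only gets the new one.
$newChunks = $buffer->readFrom('msg-1', 1);
echo implode('', $newChunks), "\n";
```

The consumer keeps track of its own offset, so several readers can follow the same message at their own pace.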

Streams give developers more control to design better experiences. They can create the feeling of talking with a living being, thus increasing the quality of your product.

Possibilities are endless but remember, creativity is the key.

Disclaimer: No AI models were used in the writing of this article. The text content was purely written by hand by its author. Human generated content still has its place in the world and must continue to live on. Only the image was generated using an AI model.