Enhance your ChatGPT prompt with Pinecone and PHP

When developing a new AI-powered application, you often need to provide external knowledge to AI models. They certainly have their own knowledge, learned during training, but the information needed to produce the right answer may be private or specific to the task at hand. In those cases, relevant data needs to be sent along with the user's query to increase your chances of success.

Credits: DALL-E

Given the usage cost and the complexity of prompts, it's crucial to pick the right data to send at the right moment. This is easier said than done. More often than not, traditional search methods cannot be used: users don't type specific keywords in their queries, or what they ask is vague and about a general subject. To address these situations, a new approach has emerged and become widely accessible in recent years: word embedding. The idea is to convert a text (words and phrases) into a vector, a list of numbers that captures its meaning rather than just the words it contains. For example, the sentences:

The tiger is running towards the rabbit where the frog lies in wait.

and

The rabbit is running towards the frog where the tiger lies in wait.

contain exactly the same words but have completely different meanings. Because embeddings capture meaning, the two sentences result in different vectors.
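To make the limitation of keyword matching concrete, here is a small standalone PHP check (not part of the project built below). It reduces each sentence to a sorted list of lowercase words, a simplified stand-in for what a keyword-based comparison sees, and shows that both sentences are indistinguishable at that level even though they are different sentences.

```php
<?php
// Reduces a sentence to a sorted list of lowercase words. Word order is
// thrown away, which is roughly what a plain keyword comparison ignores too.
function bagOfWords(string $sentence): array
{
    $words = preg_split('/\W+/', strtolower($sentence), -1, PREG_SPLIT_NO_EMPTY);
    sort($words);
    return $words;
}

$first = "The tiger is running towards the rabbit where the frog lies in wait.";
$second = "The rabbit is running towards the frog where the tiger lies in wait.";

var_dump(bagOfWords($first) === bagOfWords($second)); // bool(true): same words
var_dump($first === $second);                         // bool(false): different sentences
```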

Converting meaning into numbers is great, but what can we do with it? The answer is vector databases. As the name implies, this type of database engine is optimized to store and manipulate vectors. One such engine is Pinecone. It provides a place where you can send your generated vectors and run similarity searches over them. For each matching record, it returns a relevance score that you can use to gauge how much confidence to place in that match.
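To build an intuition for what such a search computes, here is a naive in-memory sketch: it scores every stored vector against a query vector with cosine similarity (the metric used for the index later in this article) and returns the best matches with their scores. It is only an illustration with made-up 3-dimensional vectors and hypothetical ids; vector databases typically rely on approximate nearest-neighbor indexes rather than a full scan like this one.

```php
<?php
// Cosine similarity: dot product divided by the product of the two vector lengths.
function cosineSimilarity(array $a, array $b): float
{
    $a = array_values($a);
    $b = array_values($b);
    if (count($a) !== count($b)) {
        throw new InvalidArgumentException('Vectors must have the same dimension.');
    }

    $dot = 0.0;
    $normA = 0.0;
    $normB = 0.0;
    foreach ($a as $i => $value) {
        $dot += $value * $b[$i];
        $normA += $value * $value;
        $normB += $b[$i] * $b[$i];
    }

    // A zero vector has no direction, so treat it as unrelated to everything.
    if ($normA === 0.0 || $normB === 0.0) {
        return 0.0;
    }

    return $dot / (sqrt($normA) * sqrt($normB));
}

// Naive full scan: scores every stored vector and keeps the $topK best, highest first.
function searchInMemory(array $index, array $queryVector, int $topK = 1): array
{
    $matches = [];
    foreach ($index as $id => $vector) {
        $matches[] = ['id' => $id, 'score' => cosineSimilarity($vector, $queryVector)];
    }
    usort($matches, fn (array $x, array $y): int => $y['score'] <=> $x['score']);

    return array_slice($matches, 0, $topK);
}

// Hypothetical toy data: real text-embedding-3-small vectors have 1536 dimensions.
$index = [
    'clinton_biography' => [0.9, 0.1, 0.0],
    'k16_tragedy' => [0.1, 0.2, 0.95],
];
$queryVector = [0.0, 0.3, 0.9];

print_r(searchInMemory($index, $queryVector, 1)); // the best match is 'k16_tragedy'
```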

Let's take a look at how to implement this concept in PHP. To follow the demonstration on your own, you need two things:

- A Pinecone account. A free account is enough to test things out

- A paid OpenAI account. To interact with AI models through the API, you need a paid account with a valid OpenAI API key.

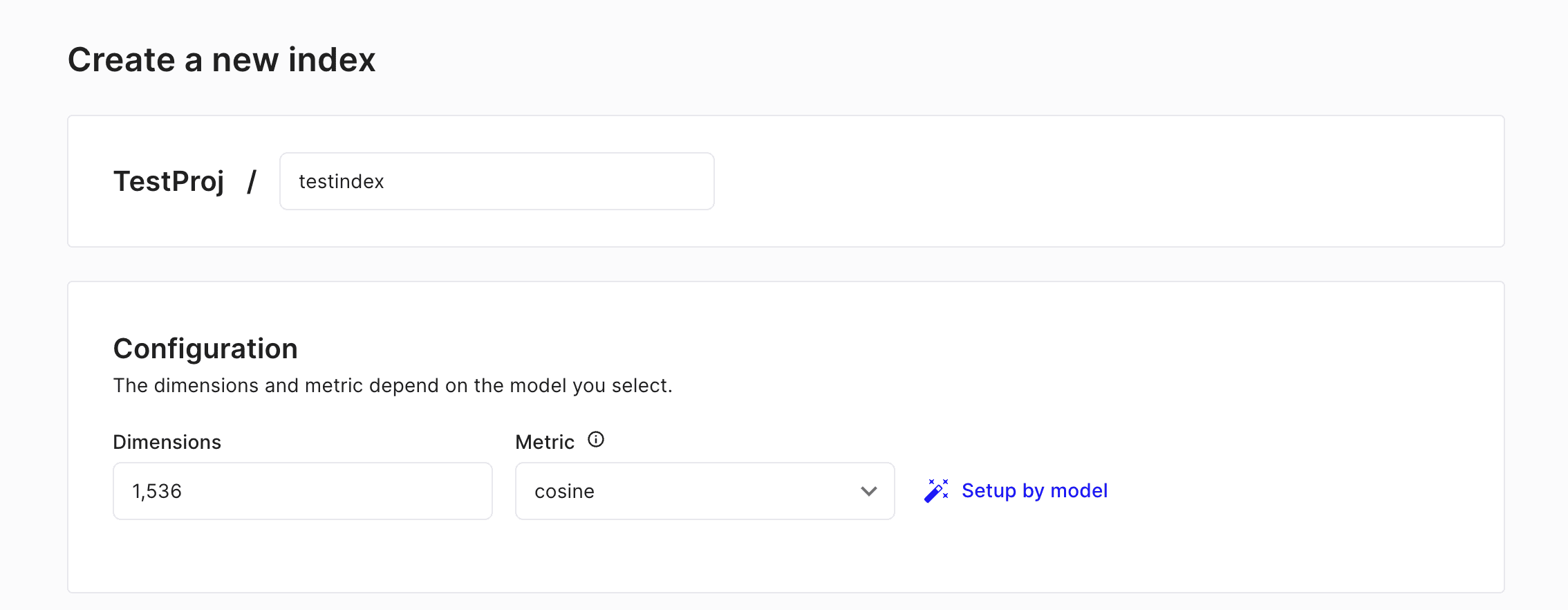

In your Pinecone account, create a new index with a vector dimension of 1536 using the cosine metric. This means that the vectors we push to this index are expected to be 1536 numbers long (the default output size of the text-embedding-3-small model used below), and the engine will use cosine similarity to compare them.
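For reference, the cosine metric scores two vectors $\mathbf{a}$ and $\mathbf{b}$ by the angle between them rather than by their length, here over the index's 1536 dimensions:

$$
\cos(\mathbf{a}, \mathbf{b}) = \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert} = \frac{\sum_{i=1}^{1536} a_i b_i}{\sqrt{\sum_{i=1}^{1536} a_i^2} \, \sqrt{\sum_{i=1}^{1536} b_i^2}}
$$

A score of 1 means the vectors point in the same direction, 0 means they are orthogonal (unrelated), and -1 means they point in opposite directions. As a small worked example with made-up 3-dimensional vectors $\mathbf{a} = (1, 2, 2)$ and $\mathbf{b} = (2, 1, 2)$: $\mathbf{a} \cdot \mathbf{b} = 2 + 2 + 4 = 8$ and $\lVert \mathbf{a} \rVert = \lVert \mathbf{b} \rVert = \sqrt{9} = 3$, so $\cos(\mathbf{a}, \mathbf{b}) = 8/9 \approx 0.89$.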

Once the index is created, you will need its Index URL and your API key in your code. In the examples below, both are read from environment variables.

The first step is to implement a way to convert text into vectors. For this, we will query a text embedding model called text-embedding-3-small provided by OpenAI. This model has been trained and optimized to perform word embeddings on a large scale.

Before we begin, let's use Composer to download and import the OpenAI PHP SDK into our new project.

```shell
composer require openai-php/client
```

Then let's create a new OpenAiAdapter class where we will put our interaction logic with OpenAI.

```php
namespace MyGreatApp;

use OpenAI;

class OpenAiAdapter
{
    //…

    public function convertToVector(string $textContent): ?array
    {
        //(1) We get our API key from the environment and (2) initialize the OpenAI client
        $yourApiKey = getenv('YOUR_API_KEY');
        $client = OpenAI::client($yourApiKey);

        //(3) We call the embeddings endpoint with the text to convert
        $response = $client->embeddings()->create([
            'model' => 'text-embedding-3-small',
            'input' => $textContent,
        ]);

        //(4) Finally, we parse the response from OpenAI and return the newly created vector
        foreach ($response->embeddings as $embedding) {
            return $embedding->embedding;
        }

        return null;
    }

    //…
}
```

The above function does the following:

- It gets the API key from an environment variable initially loaded by the app logic

- It creates a client that connects to OpenAI and is ready to communicate with AI models

- It sends a word embedding request asking for the text content to be converted into a vector

- It inspects the response sent by OpenAI. If it contains a vector, the function returns it; otherwise it returns null to signal to the caller that something went wrong. Feel free to adjust this logic to your needs
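Since every call to the embeddings endpoint is billed, it can be worth avoiding duplicate requests for the same text. Below is a minimal sketch, not part of the original project: a hypothetical CachedEmbedder class that wraps any text-to-vector callable, such as convertToVector(), and keeps the results in memory for the lifetime of the PHP process. It deliberately does not cache null results, so a failed call can be retried.

```php
<?php
// Hypothetical helper: memoizes a text-to-vector function so the same text
// is only sent to the embedding API once per PHP process.
final class CachedEmbedder
{
    /** @var array<string, array> vectors indexed by a hash of the input text */
    private array $cache = [];
    private Closure $embed;

    public function __construct(callable $embed)
    {
        $this->embed = Closure::fromCallable($embed);
    }

    public function embed(string $text): ?array
    {
        $key = hash('sha256', $text);

        if (!isset($this->cache[$key])) {
            $vector = ($this->embed)($text);
            // Failures (null) are not cached, so the next call can try again.
            if ($vector === null) {
                return null;
            }
            $this->cache[$key] = $vector;
        }

        return $this->cache[$key];
    }
}

// Usage with a fake embedder that counts how often it is really called.
$calls = 0;
$embedder = new CachedEmbedder(function (string $text) use (&$calls): array {
    $calls++;
    return [0.1, 0.2];
});

$embedder->embed('Why tragedy occurred in the latest trip?');
$embedder->embed('Why tragedy occurred in the latest trip?');
echo $calls; // 1
```

In the article's setup, it could wrap the adapter with `new CachedEmbedder(fn (string $text) => $openAiAdapter->convertToVector($text))`. Because the cache lives in memory, it only helps within a single request or script run.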

With that in hand, let's create a library of texts taken from a book in the public domain. For this example, I took paragraphs from The Sea Horror by Edmond Hamilton. I picked two unrelated paragraphs and copied them into the project.

```php
//…

$library = [
    'sea_horror_page_1_paragraph_2' => [
        'metadata' => [
            'author' => 'Edmond Hamilton',
            'title' => 'The sea horror',
            'page' => 1,
            'paragraph' => 2,
        ],
        'textContent' => "It is with Clinton himself that the beginning lies, and with the expedition
which bore his name. Dr. Herbert Clinton, holder of the chair of marine zoology
at the University of London, was generally conceded to be the foremost expert on
deep-sea life in all the British Isles. For a score of years, indeed, his fame
had risen steadily as a result of his additions to scientific knowledge. He had
been the one to prove first the connection between the absence of ultra-violet
rays and the strange phosphorescence of certain forms of bathic life. He had, in
his famous Indian Ocean trawlings, established the significance of the
quantities of foraminiferal ooze found on the scarps of that sea's bottom. And
he it was who had annihilated for all time the long-disputed Kempner-Stoll
theory by his brilliant new classification of ascidian forms."
    ],
    'sea_horror_page_2_paragraph_8' => [
        'metadata' => [
            'author' => 'Edmond Hamilton',
            'title' => 'The sea horror',
            'page' => 2,
            'paragraph' => 8,
        ],
        'textContent' => "Concerning the strange last message from the K-16 there was still discussion,
but even that was capable of more than one explanation. It was pointed out that
Clinton was an ardent zoologist, and that the discovery of some entirely new
form might have caused the exaggerated language of his message. Stevens, who
knew the calm and precise mentality of his superior rather better than that,
would not believe in such an explanation, but was unable to devise a better one
to fit that sensational last report. It was the general belief, therefore, that
in his excitement over some new discovery Clinton had ordered his submarine to a
depth too great, and had met disaster and death there beneath the terrific
pressure of the waters. Certainly, whatever its defects, there was no other
theory that fitted the known facts so well."
    ],
];
```

As you can see, the second paragraph of the first page and the eighth paragraph of the second page have been pasted here. They are unrelated and talk about two different things: one describes Dr. Herbert Clinton's expertise and why he is well known, while the other is about the K-16 tragedy that happened at the bottom of the ocean.
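The excerpts above keep the hard line breaks of the book's original layout. Optionally, you could collapse them into single spaces before embedding and storing the text. Here is a small sketch with a hypothetical normalizeText() helper; it mainly keeps the stored text tidy, and whether it changes search quality is something to verify on your own data.

```php
<?php
// Hypothetical helper: replaces every run of whitespace (line breaks, tabs,
// repeated spaces) with a single space and trims both ends.
function normalizeText(string $text): string
{
    return trim(preg_replace('/\s+/u', ' ', $text) ?? $text);
}

$excerpt = "It is with Clinton himself that the beginning lies, and with the expedition
which bore his name.";

echo normalizeText($excerpt);
// It is with Clinton himself that the beginning lies, and with the expedition which bore his name.
```

In the library loop, it would be applied as `$openAiAdapter->convertToVector(normalizeText($data['textContent']))`.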

Let's try converting the first one into a vector.

```php
use MyGreatApp\OpenAiAdapter;

//…

$openAiAdapter = new OpenAiAdapter();
$vector = $openAiAdapter->convertToVector($library['sea_horror_page_1_paragraph_2']['textContent']);

var_dump($vector);
//Output (abridged): [0.033571497, 0.02830538, 0.052743454, 0.0906211, 0.020406203, … , -0.026865426]
```

The response we get from OpenAI is a very long array of numbers (1536 of them, matching our index dimension) representing the meaning of our first excerpt mathematically. This is great! The next step is to send this vector to our newly created Pinecone index. To do so, let's create a PineconeAdapter class where we will put our interaction logic with Pinecone.

```php
namespace MyGreatApp;

class PineconeAdapter
{
    //…

    public function pushToPinecone(array $vector, string $id, array $metadata): bool
    {
        //(1) Fetching the key and index URL to connect to Pinecone
        $yourApiKey = getenv('YOUR_PINECONE_KEY');
        $url = getenv('YOUR_PINECONE_INDEX') . '/vectors/upsert';

        //(2) Assembling the request body to send to Pinecone
        $body = json_encode([
            'namespace' => 'my_namespace_name',
            'vectors' => [
                [
                    'id' => $id,
                    'values' => $vector,
                    'metadata' => $metadata,
                ],
            ],
        ]);

        //(3) Communicating with the REST API endpoint through a cURL request
        $curl = curl_init($url);
        curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($curl, CURLOPT_CUSTOMREQUEST, 'POST');
        curl_setopt($curl, CURLOPT_POSTFIELDS, $body);
        curl_setopt($curl, CURLOPT_HTTPHEADER, [
            'Content-Type: application/json',
            'Api-Key: ' . $yourApiKey,
            'Content-Length: ' . strlen($body),
        ]);
        $response = curl_exec($curl);
        curl_close($curl);

        //(4) Checking that the response reports at least 1 upserted vector
        //    (a failed request or an error body has no upsertedCount, so it counts as 0)
        $decoded = is_string($response) ? json_decode($response, true) : null;
        $count = $decoded['upsertedCount'] ?? 0;

        return $count > 0;
    }

    //…
}
```

In the above example, we are communicating with the Pinecone REST API through the Upsert Vectors endpoint. This endpoint writes vector data into the specified index. With each vector, you can attach metadata that can be returned when searching for records. As a general rule, it's good practice to add metadata that will help you process the record once found. It can give you information you would otherwise have to fetch through other processes, potentially reducing load and increasing speed.

The above function does the following:

- Retrieves the API key and the index URL from environment variables initially loaded by the app logic

- Generates the body of the request to send to Pinecone

- Uses cURL to send a POST request to the REST API endpoint. It adds the API key to the header as required by Pinecone

- Parses the response and checks if at least 1 record was counted as upserted in the operation. If so, it's considered a success. Feel free to implement your own validation logic based on your needs.
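The Upsert Vectors endpoint takes a list under `vectors`, so several records can be sent in one request instead of one request per paragraph. Here is a minimal sketch, assuming the same body fields as pushToPinecone() above: the hypothetical buildUpsertBody() function builds that body for a batch and rejects any vector whose length does not match the index dimension (1536 by default here). Pinecone limits the size of a single upsert request, so check its documentation before sending large batches.

```php
<?php
// Hypothetical helper: builds one upsert request body for several records,
// using the same fields as pushToPinecone(): namespace, vectors[id, values, metadata].
function buildUpsertBody(string $namespace, array $records, int $dimension = 1536): string
{
    $vectors = [];
    foreach ($records as $id => $record) {
        // Catch dimension mismatches before Pinecone rejects the request.
        if (count($record['values']) !== $dimension) {
            throw new InvalidArgumentException("Vector '$id' does not have $dimension dimensions.");
        }
        $vectors[] = [
            'id' => (string) $id,
            'values' => $record['values'],
            // Cast to object so empty metadata is encoded as {} rather than []
            'metadata' => (object) ($record['metadata'] ?? []),
        ];
    }

    return json_encode(['namespace' => $namespace, 'vectors' => $vectors], JSON_THROW_ON_ERROR);
}

// Toy 2-dimensional vectors keep the example short; real ones have 1536 values.
$body = buildUpsertBody('my_namespace_name', [
    'sea_horror_page_1_paragraph_2' => ['values' => [0.1, 0.2], 'metadata' => ['page' => 1]],
    'sea_horror_page_2_paragraph_8' => ['values' => [0.3, 0.4]],
], 2);

echo $body;
```

With a body like this, the cURL code from pushToPinecone() could send the whole library in a single call, with the loop only collecting the vectors first.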

Let's come back to our library and send all the vectors into our index:

use MyGreatAppOpenAiAdapter;

use MyGreatAppPineconeAdapter;

//..

$openAiAdapter = new OpenAiAdapter();

$pineconeAdapter = new PineconeAdapter();

foreach($library as $id => $data)

{

$vector = $openAiAdapter->convertToVector($data['textContent']);

$pineconeAdapter->pushToPinecone(

$vector,

$id,

$data['metadata']

);

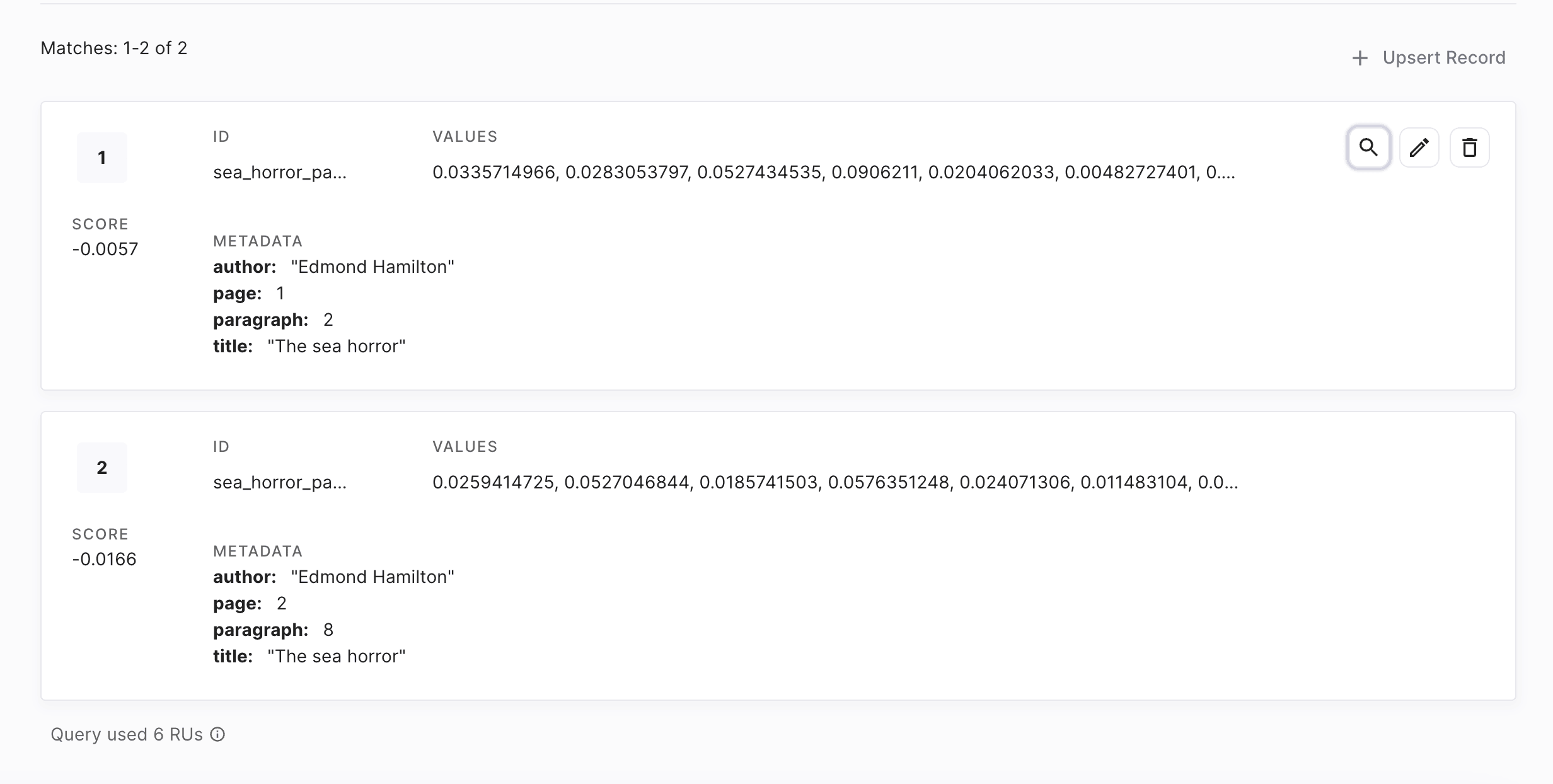

}Now if we refresh our Pinecone dashboard and take a look, we should be able to see the two paragraphs and their corresponding vectors.

Great! We officially have a working vector database filled with knowledge about the book. The last remaining piece is searching those vectors. For the sake of this demonstration, let's pretend we are building an app that uses ChatGPT to answer user questions about the book. To do so, we need to use those questions as search queries to find matching results.

Let's reopen our PineconeAdapter and add a new function to search for vectors.

```php
namespace MyGreatApp;

class PineconeAdapter
{
    //…

    public function pushToPinecone(array $vector, string $id, array $metadata): bool
    {
        //…
    }

    public function searchForVector(array $queryVector): ?array
    {
        //(1) Fetching the key and index URL to connect to Pinecone
        $yourApiKey = getenv('YOUR_PINECONE_KEY');
        $url = getenv('YOUR_PINECONE_INDEX') . '/query';

        //(2) Assembling the request body to send to Pinecone
        $body = json_encode([
            'namespace' => 'my_namespace_name',
            'topK' => 1, //We only want 1 record returned
            'includeValues' => false, //No need to get the full vector in the response
            'includeMetadata' => true, //Yes, we want the metadata associated with the vector
            'vector' => $queryVector,
        ]);

        //(3) Communicating with the REST API endpoint through a cURL request
        $curl = curl_init($url);
        curl_setopt($curl, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($curl, CURLOPT_CUSTOMREQUEST, 'POST');
        curl_setopt($curl, CURLOPT_POSTFIELDS, $body);
        curl_setopt($curl, CURLOPT_HTTPHEADER, [
            'Content-Type: application/json',
            'Api-Key: ' . $yourApiKey,
            'Content-Length: ' . strlen($body),
        ]);
        $response = curl_exec($curl);
        curl_close($curl);

        // Stop here if the request itself failed
        if (!is_string($response)) {
            return null;
        }

        //(4) Parsing the records found and returning the top one
        $response = json_decode($response);
        $found = null;

        if (!empty($response->matches)) {
            $found = [
                'id' => $response->matches[0]->id,
                'metadata' => $response->matches[0]->metadata ?? null,
            ];
        }

        return $found;
    }
}
```

The above function does the following:

- Retrieves the API key and the index URL from environment variables initially loaded by the app logic

- Generates the body of the request to send to Pinecone. Take note that we only ask to have 1 record returned by setting the topK parameter to 1.

- Uses cURL to send a POST request to the REST API endpoint. It adds the API key to the header as required by Pinecone

- Parses the response and checks which record was found. In this example, we return it regardless of its relevance score, but you could add logic to discard it when the score is too low to trust that it is relevant.
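Pinecone's query response includes a `score` for each match, so a confidence check can be added on top of the search. Here is a minimal sketch, assuming you have the raw JSON body returned by the query endpoint: the hypothetical pickConfidentMatch() function keeps the best match only if its score reaches a minimum. The threshold is an assumption to tune on your own data; with the cosine metric, a higher score means more similar vectors.

```php
<?php
// Hypothetical helper: returns the highest-scoring match from a Pinecone query
// response, or null when no match reaches $minScore.
function pickConfidentMatch(string $responseJson, float $minScore): ?array
{
    $response = json_decode($responseJson, true);
    $best = null;

    foreach ($response['matches'] ?? [] as $match) {
        $score = $match['score'] ?? null;
        if ($score === null || $score < $minScore) {
            continue; // missing score or not relevant enough
        }
        if ($best === null || $score > $best['score']) {
            $best = [
                'id' => $match['id'],
                'score' => $score,
                'metadata' => $match['metadata'] ?? [],
            ];
        }
    }

    return $best;
}

// Made-up response for illustration only; real scores depend on your data.
$json = '{"matches":[{"id":"sea_horror_page_2_paragraph_8","score":0.42,"metadata":{"page":2}},'
    . '{"id":"sea_horror_page_1_paragraph_2","score":0.18,"metadata":{"page":1}}],"namespace":"my_namespace_name"}';

$match = pickConfidentMatch($json, 0.3);
echo $match['id'] ?? 'No confident match', PHP_EOL; // sea_horror_page_2_paragraph_8
var_dump(pickConfidentMatch($json, 0.5));           // NULL: no match reaches 0.5
```

To use it, searchForVector() could pass its raw `$response` string to this function instead of decoding it itself, and raise `topK` if you want several candidates to choose from.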

Let's try our search logic by asking a question that contains none of the second paragraph's keywords but is related to it in meaning.

```php
use MyGreatApp\OpenAiAdapter;
use MyGreatApp\PineconeAdapter;

//…

$openAiAdapter = new OpenAiAdapter();
$pineconeAdapter = new PineconeAdapter();

//…

$question = "Why tragedy occurred in the latest trip?";

//The text query must be converted into a vector before searching,
//as our Pinecone index stores and compares vectors, not raw text
$vector = $openAiAdapter->convertToVector($question);
$found = $vector !== null ? $pineconeAdapter->searchForVector($vector) : null;

echo $found['id'] ?? 'No match found';
//Outputs: sea_horror_page_2_paragraph_8
```

It works! Only the second paragraph talks about a tragedy that happened during the most recent expedition. The first one mostly describes the character and what he is famous for. As expected, Pinecone suggests the second paragraph as the most relevant one for our query.

With this, we can complete the whole flow by adding the logic that asks ChatGPT to the OpenAiAdapter, so we can send the user's question and get an answer to display.

```php
namespace MyGreatApp;

use OpenAI;

class OpenAiAdapter
{
    //…

    public function convertToVector(string $textContent): ?array
    {
        //…
    }

    public function askQuestion(string $prompt): ?string
    {
        //(1) Same client setup as in convertToVector()
        $yourApiKey = getenv('YOUR_API_KEY');
        $client = OpenAI::client($yourApiKey);

        //(2) Sending the prompt to the chat endpoint as a user message
        $response = $client->chat()->create([
            'model' => 'gpt-4-turbo',
            'messages' => [
                ['role' => 'user', 'content' => $prompt],
            ],
        ]);

        //(3) Returning the first answer written by the assistant
        foreach ($response->choices as $result) {
            if ($result->message->role === 'assistant') {
                return $result->message->content;
            }
        }

        return null;
    }

    //…
}
```

Let's test it by creating a prompt template into which we inject the question and the result of the knowledge search in the vector database.

```php
use MyGreatApp\OpenAiAdapter;
use MyGreatApp\PineconeAdapter;

//…

$openAiAdapter = new OpenAiAdapter();
$pineconeAdapter = new PineconeAdapter();

//…

$question = "Why tragedy occurred in the latest trip?";

//The text query must be converted into a vector before searching,
//as our Pinecone index stores and compares vectors, not raw text
$vector = $openAiAdapter->convertToVector($question);
$found = $vector !== null ? $pineconeAdapter->searchForVector($vector) : null;

// Without a matching passage, there is nothing to inject into the prompt
if ($found === null) {
    exit('No relevant passage found.');
}

$prompt = "You are a famous writer and readers are in the middle
of a Q&A session where you answer about your latest book.
Here is a relevant passage from the book to use to answer the question: \"{EXCERPT}\"
The question you are asked is: \"{QUESTION}\"";

$customPrompt = str_replace(
    ['{EXCERPT}', '{QUESTION}'],
    [$library[$found['id']]['textContent'], $question],
    $prompt
);

echo $openAiAdapter->askQuestion($customPrompt);
```

The AI model gave me this response, which answers the user's question well:

The tragedy in the latest trip, as can be inferred from the passage, likely occurred due to a combination of human error and the extreme conditions under which the characters were operating. Clinton, driven by his passion as a zoologist, possibly discovered a new marine species that excited him tremendously. This excitement, perhaps clouding his usual judgment, might have led him to push the submarine, the K-16, beyond its safe operational limits to either observe or collect samples of this new discovery. Consequently, it is implied that the submarine may have descended to a depth that was too great, resulting in it being unable to withstand the immense pressure of the deep waters, leading to its catastrophic failure and the tragic loss of lives. Despite alternative theories, this explanation aligns best with Clinton's character traits and the circumstances leading to the last known communication from the submarine.
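A common variation on the prompt template above is to move the instructions and the retrieved passages into a `system` message and send the reader's question as a separate `user` message, which also makes it easy to include several passages when `topK` is greater than 1. Here is a minimal sketch with a hypothetical buildMessages() function; the instruction wording is illustrative only.

```php
<?php
// Hypothetical helper: builds the 'messages' array for a chat request, with the
// instructions and excerpts in a system message and the question as the user message.
function buildMessages(array $excerpts, string $question): array
{
    $context = '';
    foreach (array_values($excerpts) as $i => $excerpt) {
        $context .= sprintf("Excerpt %d:\n\"%s\"\n\n", $i + 1, $excerpt);
    }

    $instructions = "You are a famous writer answering readers' questions about your latest book.\n"
        . "Answer using the excerpts below. If they do not contain the answer, say so.\n\n";

    return [
        ['role' => 'system', 'content' => $instructions . rtrim($context)],
        ['role' => 'user', 'content' => $question],
    ];
}

$messages = buildMessages(
    ['First retrieved passage', 'Second retrieved passage'],
    'Why tragedy occurred in the latest trip?'
);

print_r($messages);
```

The resulting array could replace the single user message passed as `'messages'` to `$client->chat()->create()` in askQuestion().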

Closing thoughts

Injecting your own knowledge into your prompt can drastically increase the quality of the answers generated by AI models: you give them the data they need to properly answer your request. It can also help counter hallucinations, since a model that is given the working material is less likely to fall back on its own knowledge and make things up about the subject at hand.

The possibilities are endless, but remember: creativity is key.

Disclaimer: No AI models were used in the writing of this article. The text content was purely written by hand by its author. Human generated content still has its place in the world and must continue to live on. Only the image was generated using an AI model.